Virtualization for the Future of Cellular Networks

What is Virtualization?

Virtualization in computing typically means running multiple operating system instances on one computer. The OS and the hardware no longer have a 1:1 relationship; instead, the hardware is shared across multiple operating systems. Ideally, each instance is unaware that it is sharing hardware with other operating systems, and the instances are isolated from one another.

This is useful for several reasons. Depending on your business model, you may want to give each client a complete OS environment without having the hardware to give each of them a dedicated machine. When a system is shared, virtualization keeps everyone out of each other's business: if one client crashes their environment, it typically won't take down the whole computer and every other client with it. You can also use it simply to isolate software, like databases, web servers, or security applications, that doesn't like sharing a software environment. Finally, computers typically spend most of their time idle, so the more environments you pack onto a machine, the more likely it is that enough of them will be busy to use all the available resources.
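The packing argument in the last sentence can be made concrete with a toy model. The sketch below assumes each environment is busy independently with the same probability `p` at any moment, which real workloads rarely satisfy exactly; the function names are hypothetical.

```python
# Toy consolidation model (assumption): each of n environments on one host
# is busy independently with probability p at any given moment.

def expected_busy(n: int, p: float) -> float:
    """Expected number of busy environments on the host."""
    return n * p


def prob_any_busy(n: int, p: float) -> float:
    """Probability that at least one environment is busy (host not idle)."""
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(f"{n:2d} environments: expected busy = {expected_busy(n, 0.1):.1f}, "
              f"P(host not idle) = {prob_any_busy(n, 0.1):.2f}")
```

With `p = 0.1`, a single environment leaves the host idle 90% of the time, while ten environments keep it doing some work about 65% of the time (1 - 0.9^10 ≈ 0.65).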

There are multiple ways to virtualize. Xen is a bare-metal (type 1) hypervisor: a sort of operating system for hosting operating systems, and it does nothing else. Another way is virtualization software like VirtualBox, which runs on your desktop and shows each hosted OS in a window. Ever wanted to try Linux or some other eccentric OS? You can do it this way. A third way is through the Linux kernel's namespaces, which run a single OS instance but isolate software environments from one another. Docker is one program that capitalizes on this scheme.

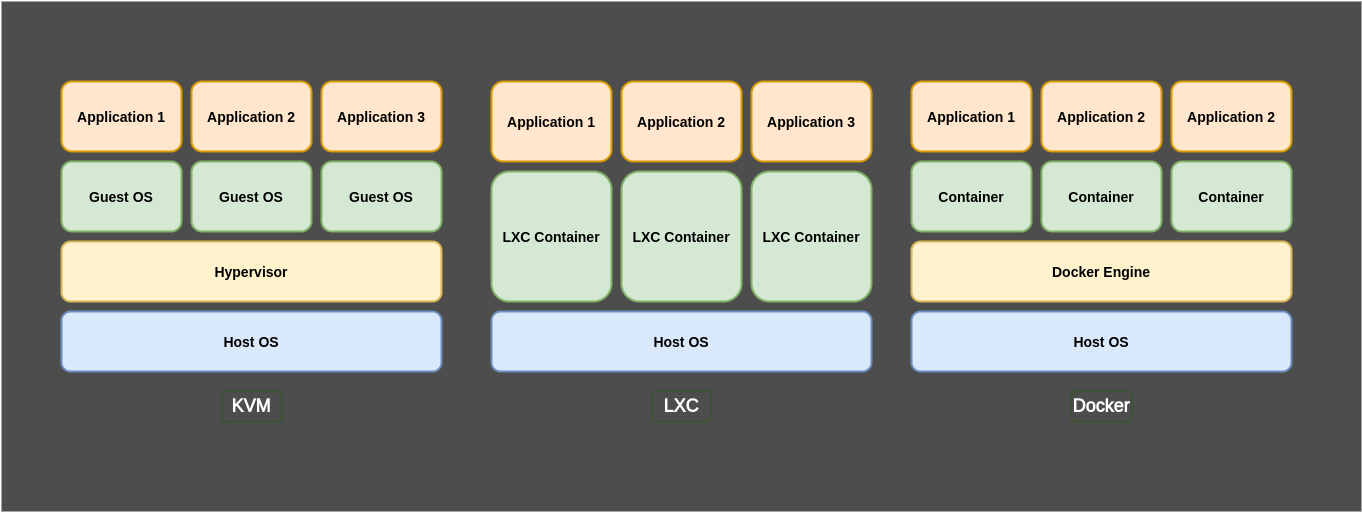

The different ways to virtualize meet different needs, come with different compromises, and carry different amounts of overhead (computing resources consumed just in managing the virtualization). Some hardware supports virtualization directly for a performance boost: most modern x86 CPUs, not only high-end and server parts, provide virtualization extensions such as Intel VT-x and AMD-V.
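On Linux you can check whether an x86 CPU advertises these extensions by looking for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. This is a minimal sketch assuming an x86 host; inside a VM the flag may be hidden unless nested virtualization is exposed, and the helper name is hypothetical.

```python
from pathlib import Path

# x86 CPU flags that advertise hardware virtualization support.
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def virtualization_extensions(cpuinfo_text: str) -> set[str]:
    """Return the virtualization extensions listed in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # On x86, the kernel lists CPU features on lines starting with "flags".
        if line.startswith("flags"):
            _, _, flags = line.partition(":")
            for flag in flags.split():
                if flag in VIRT_FLAGS:
                    found.add(VIRT_FLAGS[flag])
    return found


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # only present on Linux
    text = path.read_text() if path.exists() else ""
    print(virtualization_extensions(text) or "no hardware virtualization flags found")
```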

Cloud computing is Internet-based computing in which a massive pool of shared resources is available to clients on demand. You don't know who owns these computers, how many there are, or where they are, and you don't care: you need compute power, and you can buy it through a service provider.

The advantages are that you get exactly what you want at a competitive price, you have high availability (many types of hardware and environment faults should be transparent to you), and you don't have to worry about ownership, upgrades, maintenance, staff, etc. The downsides are the lack of ownership and reduced control over security. You don't own these computers, you don't know who is looking at your stuff, and your stuff can be held for ransom by the provider in the case of a dispute. If your data is sensitive, do you trust a third party?

What are the options?

General Structure

Traditional Virtualization: Hypervisor

1. QEMU

QEMU is a type 2 hypervisor that runs within user space and performs virtual hardware emulation.

2. KVM (Kernel-Based Virtual Machine)

KVM is a type 1 hypervisor that runs in kernel space.

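3. VMware

VMware offers both kinds: ESXi is a type 1 (bare-metal) hypervisor, while VMware Workstation and Fusion are type 2 hypervisors that run on top of a host OS.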

4. VirtualBox

VirtualBox is a type 2 hypervisor that runs as an application on top of a host operating system.
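QEMU and KVM are often used together: QEMU emulates the machine, and when KVM is available it hands CPU virtualization to the kernel module instead of emulating the CPU in software. The sketch below builds a `qemu-system-x86_64` command line using the standard `-m`, `-smp`, `-drive` and `-enable-kvm` options and only prints it; the disk image `guest.qcow2` and the helper names are hypothetical.

```python
import os
import shlex


def kvm_available(dev: str = "/dev/kvm") -> bool:
    """KVM is usable when /dev/kvm exists and we may open it read/write."""
    return os.path.exists(dev) and os.access(dev, os.R_OK | os.W_OK)


def qemu_command(disk_image: str, memory_mb: int = 2048, cpus: int = 2,
                 use_kvm: bool = True) -> list[str]:
    """Build a qemu-system-x86_64 argument list that boots one disk image."""
    cmd = [
        "qemu-system-x86_64",
        "-m", str(memory_mb),    # guest RAM in MiB
        "-smp", str(cpus),       # number of virtual CPUs
        "-drive", f"file={disk_image},format=qcow2",
    ]
    if use_kvm:
        # Use the in-kernel KVM module rather than QEMU's software CPU
        # emulation (TCG).
        cmd.append("-enable-kvm")
    return cmd


if __name__ == "__main__":
    # "guest.qcow2" is a hypothetical disk image; nothing is executed here.
    print(shlex.join(qemu_command("guest.qcow2", use_kvm=kvm_available())))
```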

Modern Container Based Virtualization

LXC (Linux Containers)


LXC is an operating-system-level virtualization method for running multiple isolated Linux systems (containers) on a control host using a single Linux kernel. The Linux kernel provides the cgroups functionality, which allows limitation and prioritization of resources without the need to start any virtual machines, and namespace isolation functionality, which allows complete isolation of an application's view of the operating environment, including process trees, networking, user IDs and mounted file systems. LXC combines the kernel's cgroups and support for isolated namespaces to provide an isolated environment for applications. Early versions of Docker used LXC as the container execution driver, though it was dropped as of Docker v1.10.
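To make the cgroups half concrete: on a system using cgroup v2, limits are set by writing plain-text values into files in a group's directory under `/sys/fs/cgroup`. The sketch below computes values for `cpu.max` and `memory.max` (both real cgroup v2 interface files); the helper names and any directory you pass to `apply_limits` are hypothetical, and actually writing the files requires root plus the `cpu` and `memory` controllers enabled in the parent's `cgroup.subtree_control`.

```python
from pathlib import Path


def cgroup_v2_limits(cpu_fraction: float, memory_mib: int,
                     period_us: int = 100_000) -> dict[str, str]:
    """Return cgroup v2 interface-file contents for a CPU and memory limit.

    cpu.max holds "<quota> <period>" in microseconds: the group may run for
    <quota> us in every <period> us, so 0.5 means half of one CPU.
    memory.max holds a byte count; above it the kernel reclaims memory and,
    failing that, invokes the OOM killer for the group.
    """
    quota_us = round(cpu_fraction * period_us)
    return {
        "cpu.max": f"{quota_us} {period_us}",
        "memory.max": str(memory_mib * 1024 * 1024),
    }


def apply_limits(cgroup_dir: Path, limits: dict[str, str]) -> None:
    """Write the limits into an existing cgroup directory (needs privileges)."""
    for name, value in limits.items():
        (cgroup_dir / name).write_text(value)
```

For example, `cgroup_v2_limits(0.5, 256)` returns `{"cpu.max": "50000 100000", "memory.max": "268435456"}`: 50 ms of CPU time per 100 ms period and a 256 MiB memory cap. Container runtimes such as LXC and Docker, in effect, write files like these on your behalf.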

Docker

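Docker is a platform for building, sharing and running containers. On Linux, it creates containers from the same kernel features that LXC uses: namespaces for isolation and cgroups for resource limits.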

Podman

A daemonless, open source, Linux native tool designed to make it easy to find, run, build, share and deploy applications using Open Containers Initiative (OCI) containers and container images.

A container doesn’t virtualize hardware, and runs on the same kernel as the host OS. It provides isolation from the host OS, and from other containers, without the overhead of running a full VM, virtualizing hardware, etc.
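You can see this shared-kernel, separate-namespace arrangement from any Linux process: `/proc/<pid>/ns` holds one symbolic link per namespace, such as `pid:[4026531836]`, and two processes share a namespace when those identifiers match. Running the sketch on the host and then inside a container typically shows different identifiers for namespaces such as `pid`, `net` and `mnt`, even though both report the same kernel. The helper name is hypothetical.

```python
import os


def namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type -> identifier for a process, read from /proc/<pid>/ns."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, no such process, or /proc not mounted
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "net:[4026531840]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            pass  # e.g. permission denied for another user's process
    return result


if __name__ == "__main__":
    for name, ident in namespaces().items():
        print(f"{name:20} {ident}")
```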

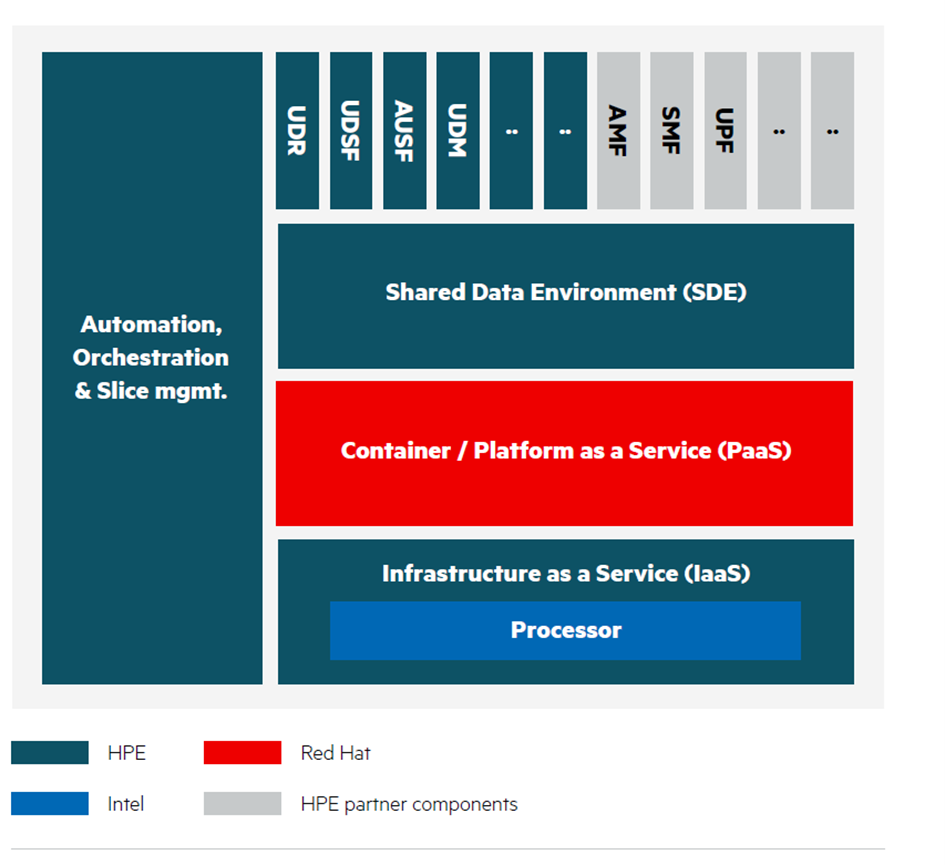

How can cellular networks utilize these?

Network Function Virtualization

Network Function Virtualization (NFV) is being touted as a key component of 5G technology because it moves network functions into software that runs on industry-standard hardware and can be managed from anywhere. Researchers at the Indian Institute of Technology Bombay are exploring the benefits and suitability of novel kernel bypass stacks as a replacement for the Linux kernel stack within the more complex and realistic network functions of mobile telecommunications networks.

Key points:

- Several kernel bypass stacks have shown promise as substitutes for the Linux kernel stack, but there is little evidence of their effectiveness in core network functions such as the Access and Mobility Management Function (AMF) and the Authentication Server Function (AUSF).

- Novel network stacks use lightweight TCP/IP processing and are not always RFC compliant.

The benefit of NFV is that developing and deploying software is much cheaper than building physical hardware appliances. Further, software running in the cloud can be scaled elastically on demand by adding more compute and storage. However, there is a catch: with network functions running in software, it becomes much more difficult to ensure performance that meets various service level objectives (SLOs).
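The two ideas in this paragraph, elastic scaling and SLOs, can be sketched together. `percentile` computes a nearest-rank 99th-percentile latency to check against a latency SLO, and `desired_replicas` applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, ceil(current replicas × observed metric / target metric). The names and numbers are hypothetical, and the real autoscaler adds a tolerance band and stabilization windows that this sketch leaves out.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample covering pct% of all samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_slo(latencies_ms: list[float], p99_target_ms: float) -> bool:
    """True if the 99th-percentile latency is within the SLO target."""
    return percentile(latencies_ms, 99) <= p99_target_ms


def desired_replicas(current: int, observed: float, target: float) -> int:
    """Proportional scale-out/in rule: ceil(current * observed / target)."""
    return max(1, math.ceil(current * observed / target))
```

For example, if 4 instances of a network function run at 90% CPU against a 60% target, `desired_replicas(4, 90, 60)` asks for 6.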

While some network functions only access and modify packet headers, others are fairly complex and run at the application layer of the network stack. Such network functions rely on abstractions, such as network sockets, that are provided by other software layers like the Linux kernel networking stack. As a result, their performance depends not only on the network function implementation itself but also on the underlying software on which it runs.
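A minimal sketch of why socket-based network functions inherit the kernel's costs, assuming a toy "network function" that just upper-cases each UDP payload on the loopback interface. The function name and its processing step are hypothetical; the point is that every `sendto()`/`recvfrom()` is a system call that pushes the packet through the kernel's network stack.

```python
import socket


def run_toy_nf(payload: bytes) -> bytes:
    """Send one datagram through a toy network function and return its reply."""
    nf = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        nf.bind(("127.0.0.1", 0))       # let the kernel pick a free port
        nf.settimeout(2.0)
        client.settimeout(2.0)
        # Each call below is a system call: the payload is copied into the
        # kernel, handled by its UDP/IP stack, and copied out again.
        client.sendto(payload, nf.getsockname())
        data, peer = nf.recvfrom(2048)
        nf.sendto(data.upper(), peer)   # the "network function" itself
        reply, _ = client.recvfrom(2048)
        return reply
    finally:
        nf.close()
        client.close()


if __name__ == "__main__":
    print(run_toy_nf(b"registration request"))
```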

IBM: 5G Cloud Stack

Linux Kernel Utilization for NFV

The kernel is the part of a computer's operating system that has the highest privilege and direct access to the hardware. When a user program needs to access the hardware, say to read data from it, the program cannot do so directly and must ask the kernel to do it on its behalf.

The Linux kernel is the lowest level of software that interfaces with the computer hardware. It manages OS resources: making sure there is enough memory available for applications to run, optimizing processor usage, and avoiding system deadlocks caused by competing application demands.

The kernel has 4 main jobs:

- Memory management: Keep track of how much memory is used to store what, and where.

- Process management: Determine which processes can use the central processing unit (CPU), when, and for how long

- Device drivers: Act as mediator/interpreter between the hardware and processes

- System calls and security: Receive requests for service from processes
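To illustrate the process-management job (which process runs, when, and for how long), here is a toy round-robin scheduler for a single CPU: each process runs for at most one fixed time slice (the quantum) and then goes to the back of the queue. This is a teaching sketch with hypothetical names; Linux's actual scheduler (CFS, replaced by EEVDF in kernel 6.6) is far more elaborate.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate round-robin scheduling on one CPU.

    bursts maps process name -> CPU time it still needs. Returns the
    timeline as (process, start, end) slices.
    """
    queue = deque(bursts.items())
    timeline = []
    clock = 0
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)           # at most one time slice
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # preempted: back of queue
    return timeline
```

For example, `round_robin({"A": 5, "B": 2, "C": 3}, quantum=2)` returns `[("A", 0, 2), ("B", 2, 4), ("C", 4, 6), ("A", 6, 8), ("C", 8, 9), ("A", 9, 10)]`.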

The kernel, if implemented properly, is invisible to the user, working in its own little world known as kernel space, where it allocates memory and keeps track of where everything is stored. What the user sees, such as applications, runs in what is known as user space. These applications interact with the kernel through the system call interface (SCI).
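System calls are visible even from Python: `os.pipe()`, `os.write()` and `os.read()` are thin wrappers over the corresponding kernel system calls, so each call below crosses the system call interface into kernel space and back. The helper name `pipe_roundtrip` is hypothetical.

```python
import os


def pipe_roundtrip(message: bytes) -> bytes:
    """Move bytes through a kernel pipe using raw system-call wrappers.

    Keep the message small: a blocking write larger than the pipe's
    capacity (64 KiB by default on Linux) would wait forever, because
    nothing reads until the write returns.
    """
    read_fd, write_fd = os.pipe()                # kernel creates the pipe
    try:
        written = os.write(write_fd, message)    # user memory -> kernel buffer
        return os.read(read_fd, written)         # kernel buffer -> user memory
    finally:
        os.close(read_fd)
        os.close(write_fd)
```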

There are several issues with the Linux kernel’s networking stack, especially when processing large volumes of network data.

- The network stack copies packets multiple times, once from the device to kernel memory, and again from the kernel to user memory.

- Every network I/O operation incurs overhead from system calls and interrupt processing.

- Network stack processing can span multiple CPU cores, causing lock contention on various kernel data structures.

- The network stack is also built atop the Virtual File System (VFS), adding overheads unrelated to network I/O altogether.
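To see why these per-packet costs matter, compute the time budget per packet at line rate. On Ethernet every frame also carries 8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap, so a minimum-size 64-byte frame occupies 84 bytes (672 bits) on the wire. The helper name is hypothetical.

```python
# Ethernet adds 8 bytes of preamble + start-of-frame delimiter and a
# 12-byte inter-frame gap to every frame on the wire.
PER_FRAME_OVERHEAD_BYTES = 8 + 12


def line_rate(link_gbps: float, frame_bytes: int = 64) -> tuple[float, float]:
    """Return (packets per second, nanoseconds per packet) at full line rate."""
    wire_bits = (frame_bytes + PER_FRAME_OVERHEAD_BYTES) * 8
    pps = link_gbps * 1e9 / wire_bits
    return pps, 1e9 / pps


if __name__ == "__main__":
    for gbps in (10, 40, 100):
        pps, ns = line_rate(gbps)
        print(f"{gbps:>3} Gbit/s, 64-byte frames: {pps / 1e6:6.2f} Mpps, "
              f"{ns:5.2f} ns per packet")
```

At 10 Gbit/s this gives about 14.88 million packets per second, or roughly 67 ns per packet; at 100 Gbit/s it is about 6.7 ns. A system call, an interrupt and two packet copies on every packet can easily exceed budgets that small.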

The popular argument today is that the kernel should not be on the data path of high-speed network interface cards, and that the best solution is simply to bypass it. Kernel bypass mechanisms such as netmap and Intel's Data Plane Development Kit (DPDK) are commonly used to build high-performance software network functions.
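Kernel bypass stacks move packets through rings of buffers that the application can access directly, and process packets in batches so that per-packet overhead is amortized; DPDK additionally polls the NIC from user space instead of relying on interrupts. The sketch below is a pure-Python illustration of such a ring with a burst dequeue, loosely mirroring the batching idea behind DPDK's `rte_eth_rx_burst()`. It is not either library's API, the class name is hypothetical, and Python is far too slow for real line-rate work.

```python
class Ring:
    """Fixed-size single-producer/single-consumer ring of packet buffers.

    Capacity must be a power of two so indices wrap with a bit mask.
    head and tail only ever increase; (tail - head) is the fill level.
    """

    def __init__(self, capacity: int) -> None:
        if capacity <= 0 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self._slots = [None] * capacity  # packet buffers (bytes) or None
        self._mask = capacity - 1
        self._head = 0  # next slot the consumer reads
        self._tail = 0  # next slot the producer writes

    def enqueue(self, pkt: bytes) -> bool:
        """Producer side (the NIC, in a real system). Drops when full."""
        if self._tail - self._head == len(self._slots):
            return False
        self._slots[self._tail & self._mask] = pkt
        self._tail += 1
        return True

    def dequeue_burst(self, max_pkts: int) -> list[bytes]:
        """Consumer side: take up to max_pkts packets in one call."""
        n = min(max_pkts, self._tail - self._head)
        burst = []
        for _ in range(n):
            idx = self._head & self._mask
            burst.append(self._slots[idx])
            self._slots[idx] = None
            self._head += 1
        return burst
```

With a capacity-4 ring, four `enqueue` calls succeed and a fifth returns `False` (the packet is dropped) until the consumer drains a burst.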

References: